The Measured Hallucination

What it is

The Measured Hallucination is when GenAI invents facts, names, or events that sound real, and presents them in a thoughtful, considered way. It can be more deceptive than the (over)confident hallucination.

Why it happens

Conversational AIs predict the most likely next words based on your prompt. If your question is vague, you might get something wildly off-base. But even with a clear, sensible query, the model can still slip into hallucination, especially when it’s trying to be helpful.

In a measured hallucination, the model wraps its guesswork in caution: “There isn’t a clear answer, but here’s what I found...” It sounds thoughtful, even responsible. But under the hood, it’s still making things up based on patterns, not facts. The answer isn’t grounded in evidence. It’s grounded in what sounds plausible, because sounding plausible is what the model is designed to do.

What it looks like

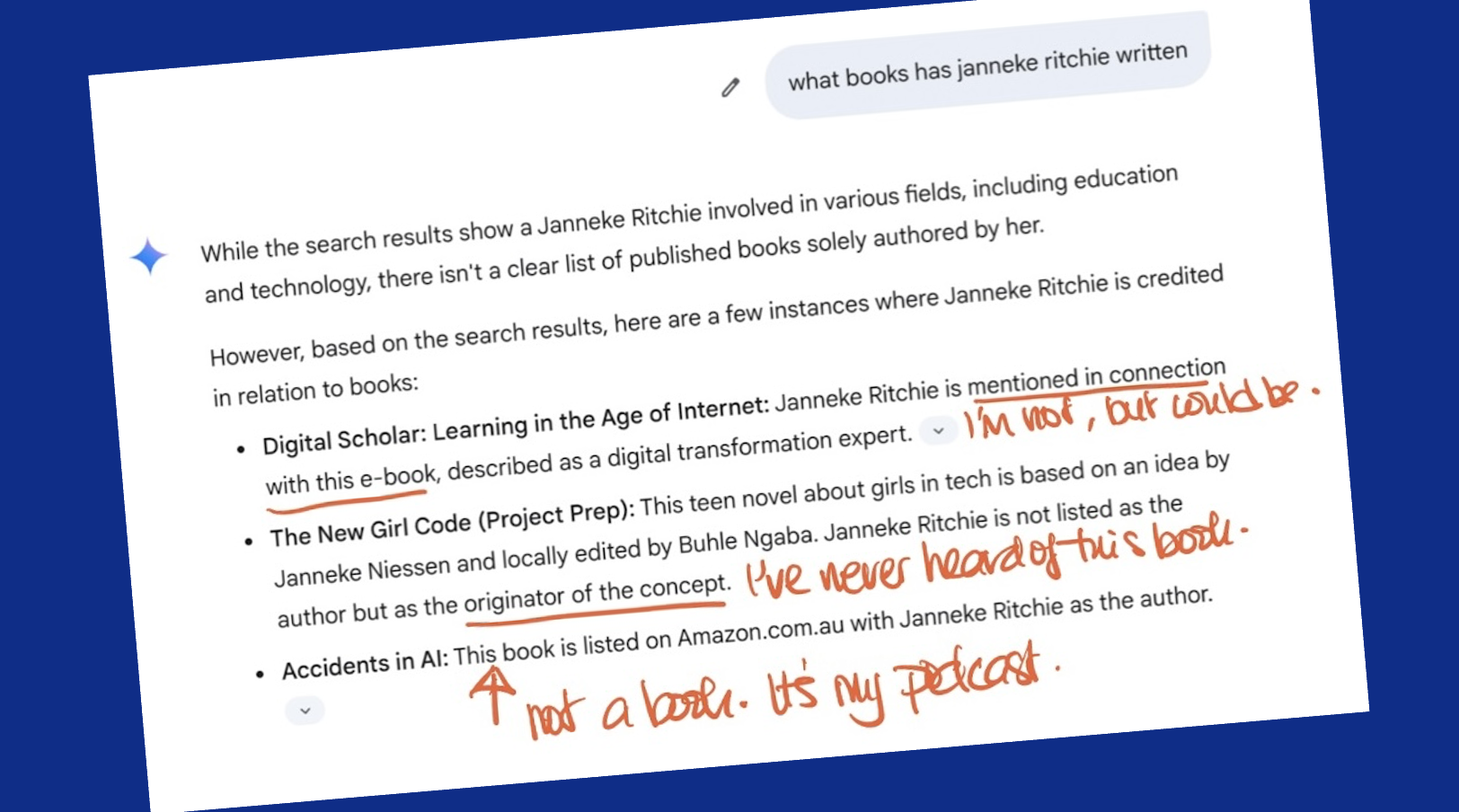

I asked GenAI, “What books has Janneke Ritchie written?” I expected a disclaimer or a redirect to a web search. What I got was much subtler, and more deceptive.


Why it matters

The measured hallucination can be especially misleading because it sounds thoughtful while making things up. These responses feel trustworthy not in spite of the hedging, but because of it. The AI speaks like someone who just Googled you, skimmed the headlines, and filled in the blanks.

How to catch it

These are not easy to catch. Verify anything (everything) you plan to rely on.

Try it yourself

Ask your GenAI what books you have written, or something else you already know the answer to. Assess the response. Is it accurate? Accurate but lacking specifics? Close but kind of off (like my podcast, for instance)?

😎 Pro Tip

Don’t rely only on what you think you know. Do your own research. Why do you think the AI generated this response? Understanding why AI misleads will help you use its outputs to better advantage.

Have a question? Want to share your experience? Email me